Available in four versions, the OpenELM language model is Apple's first open source venture into generative artificial intelligence. Will the brand, long accustomed to secrecy, adopt a different strategy in the face of OpenAI?

Will the future of generative artificial intelligence be open source? Two visions coexist within Silicon Valley.

On one side are groups like OpenAI and Google, which develop proprietary models; on the other are companies like Meta, which for the moment prefer open solutions. In France, Mistral and Kyutai also rely heavily on openness, even though Mistral's latest model is not completely open source, a consequence of the startup's high ambitions weighing on its strategy.

Where does Apple fit in all this? The Californian giant, whose entry into the generative AI sector is long overdue (it is expected to unveil its strategy on June 10, alongside iOS 18), is accustomed to closed systems and secrecy.

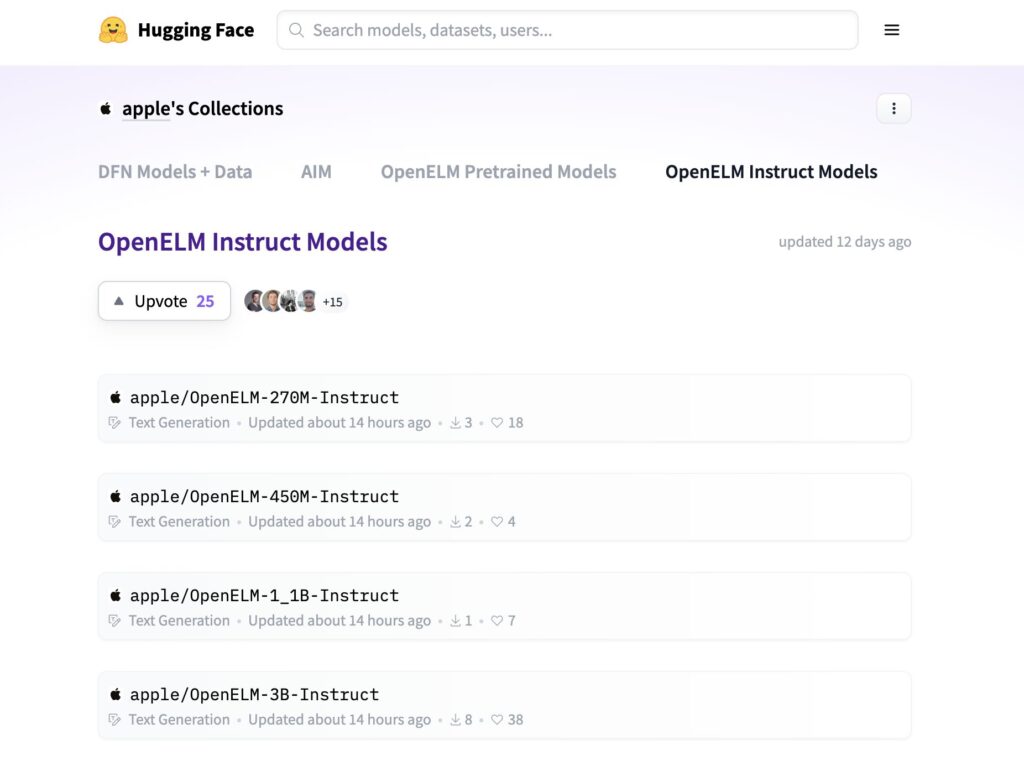

To everyone's surprise, however, the brand has just published on Hugging Face four versions of a previously unknown language model called OpenELM. It is an open source large language model (LLM), and part of its code is also available on GitHub.

Apple and AI: an inevitable open source approach?

Apple is no stranger to open source: it regularly publishes the source code of its older operating systems on a dedicated page. WebKit (Safari's engine), Swift (its programming language) and ResearchKit (a framework for medical research) are also freely accessible.

In the generative AI sector, Apple has published several projects in recent months, often on GitHub: Ferret, LLaRP, MGIE… These models are intended for researchers and aim to show that Apple has not been completely left behind by the competition, since it too trains machines to recognize images and generate text. It remains to be seen how these models will be used in iOS and macOS.

With OpenELM, Apple is undoubtedly giving a first glimpse of what is to come in its future operating systems. Available in four configurations (270 million, 450 million, 1 billion and 3 billion parameters), OpenELM is a “small” large language model. The 70 billion parameters of LLaMA-3, with a 400-billion-parameter version still to come, dwarf Apple's model. It's easy to guess where Apple is heading: a model this small can run locally, without needing to connect to Apple's servers.
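
To make the link between model size and local execution concrete, here is a rough back-of-the-envelope sketch. It only estimates the memory taken by the weights, using the nominal parameter counts quoted above. The function name `weight_footprint_gb` and the choice of 16 or 4 bits per parameter are illustrative assumptions, not figures from Apple, and the estimate ignores activations, caches and runtime overhead.

```python
# Nominal parameter counts as quoted in the article (rounded figures).
NOMINAL_PARAMS = {
    "OpenELM 270M": 270_000_000,
    "OpenELM 450M": 450_000_000,
    "OpenELM 1B": 1_000_000_000,
    "OpenELM 3B": 3_000_000_000,
    "LLaMA-3 70B": 70_000_000_000,
}


def weight_footprint_gb(num_params: int, bits_per_param: int = 16) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes).

    Hypothetical helper: bits_per_param is an assumption (16 for
    half precision, 4 for an aggressive quantization scheme).
    """
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, count in NOMINAL_PARAMS.items():
        half = weight_footprint_gb(count, 16)
        quant = weight_footprint_gb(count, 4)
        print(f"{name}: ~{half:.2f} GB at 16 bits, ~{quant:.2f} GB at 4 bits")
```

Under these assumptions, the largest OpenELM variant needs a few gigabytes for its weights, while a 70-billion-parameter model needs well over a hundred at 16 bits, which is why only the former is a plausible candidate for a phone.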

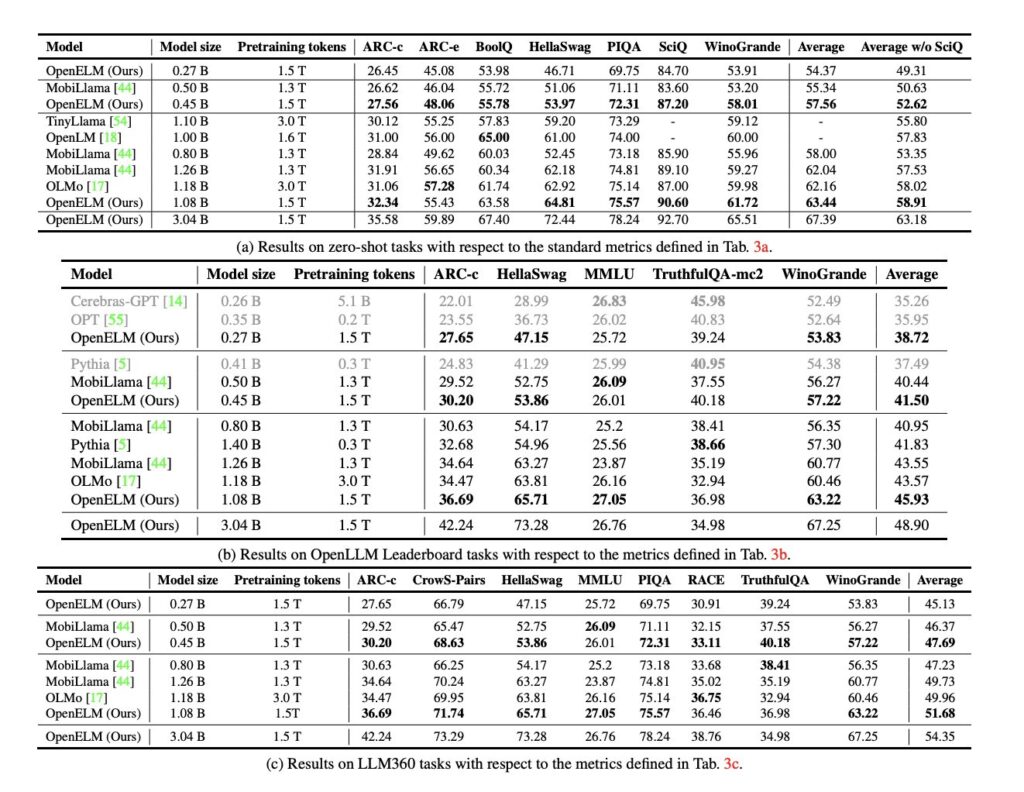

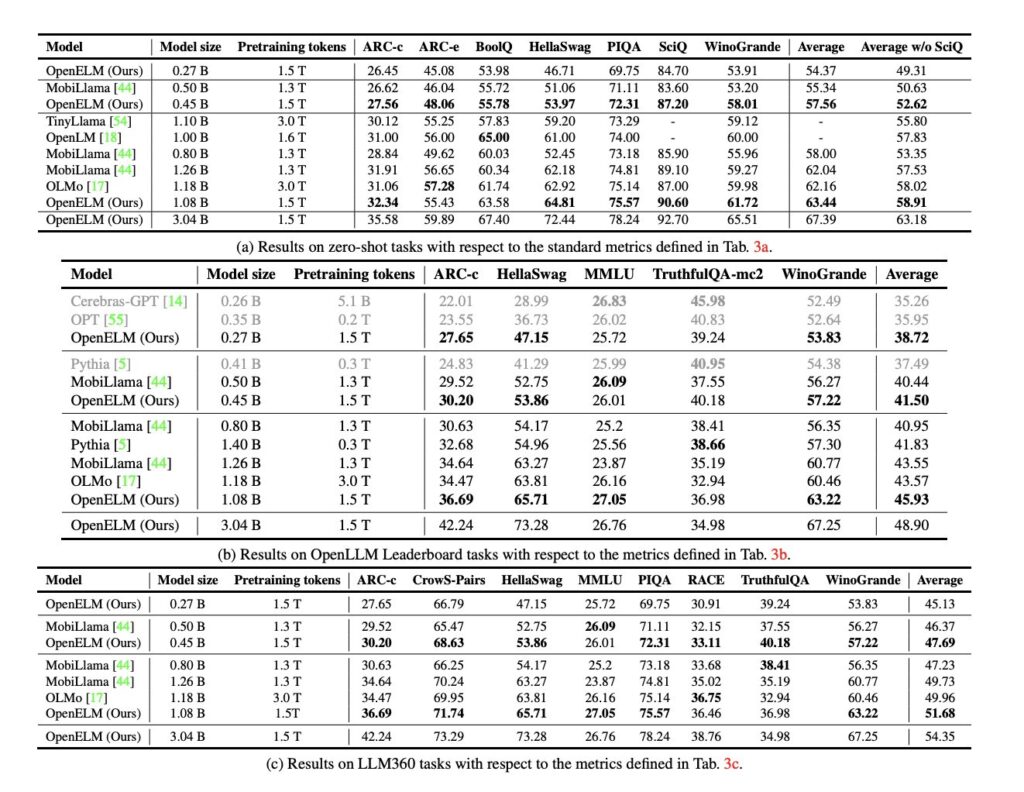

“We release OpenELM, a state-of-the-art open language model. OpenELM uses a layer-wise scaling strategy to efficiently allocate parameters in each layer of the transformer model, improving its accuracy,” Apple explains in a research paper.
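
The scaling strategy Apple describes in the quote above can be illustrated with a small sketch. The idea is that layers do not all get the same size: the number of attention heads and the width of the feed-forward block grow from the first layer to the last. The code below assumes a simple linear interpolation between a minimum and a maximum multiplier. The function name, parameter names and example values are hypothetical and do not reproduce Apple's actual configuration.

```python
def layerwise_schedule(num_layers, d_model, head_dim,
                       alpha_min, alpha_max, beta_min, beta_max):
    """Return a (num_heads, ffn_dim) pair for each transformer layer.

    Illustrative assumption: alpha scales the attention width and beta
    scales the feed-forward width, both interpolated linearly by depth.
    """
    schedule = []
    for i in range(num_layers):
        # Position of the layer in the stack, from 0.0 (first) to 1.0 (last).
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        alpha = alpha_min + (alpha_max - alpha_min) * t
        beta = beta_min + (beta_max - beta_min) * t
        num_heads = max(1, round(alpha * d_model / head_dim))
        ffn_dim = round(beta * d_model)
        schedule.append((num_heads, ffn_dim))
    return schedule


if __name__ == "__main__":
    # Made-up example values, chosen only to show the shape of the schedule.
    for layer, (heads, ffn) in enumerate(
            layerwise_schedule(4, 1024, 64, 0.5, 1.0, 1.0, 4.0)):
        print(f"layer {layer}: {heads} heads, FFN width {ffn}")
```

Compared with a uniform transformer, where every layer has the same shape, a schedule like this spends fewer parameters on early layers and more on later ones for the same overall budget.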

“OpenELM outperforms existing LLMs of comparable size trained on publicly available datasets,” the paper adds. The consumer electronics giant says its model needs half as many pre-training tokens to outperform the competition.

With this more open approach, Apple undoubtedly hopes to rally the AI community around OpenELM and enlist its help in improving the model. The brand also released CoreNet, a training library used by its open and closed language models.

OpenELM stands every chance of being integrated into the iPhone with iOS 18, if Apple deems it capable enough to launch.