As the journalist in charge of this test, I have been using the Apple Vision Pro daily for over a week. I have no doubt about the product's potential, but something bothers me. When I wear it, I am unable to “see up close”. Virtual elements placed 50 centimeters from my face are completely blurred, while what is behind them is completely sharp. How can I explain such a phenomenon, when I have never worn glasses in my life and my vision is supposedly good? To find out, I went to see an ophthalmologist.

A case far from isolated

When I noticed this vision problem, my first instinct was to go to social networks. Despite the product's youth, several similar testimonials exist on X and Reddit. The keywords “Apple Vision Pro Blurry” link to dozens of testimonials from people unable to see up close with the headset, with or without vision problems.

They all ask the same question: how is such a phenomenon possible when the wearer's eyes remain at a fixed distance from the screens? What determines near and far in virtual reality?

When I returned to France, I had a lot of people try the Apple Vision Pro. If my calculations are correct, I gave 30 demos in one week (at the time of writing this article). I quickly adopted a reflex: asking people whether they could read what was written on my smartphone. Most said yes, but 7 told me the text was blurry, roughly one in four. This is further evidence that the problem is real, and that it is not linked to the headset's sensors.

Vergence-Accommodation Conflict: Virtual Reality's Biggest Problem

I'm not going to waste any more of your time: the problem with the Apple Vision Pro is called the vergence-accommodation conflict. This is a known phenomenon in virtual reality and the cause of many problems (notably eye fatigue and nausea). All headsets are affected, even if I had never suffered from it as much as with the Apple headset. It must be said that visionOS is the only operating system that lets the user approach windows so closely, with rendering so good that we are supposed to be able to read everything as in reality. Perhaps the others, with their lower image quality, manage to hide it.

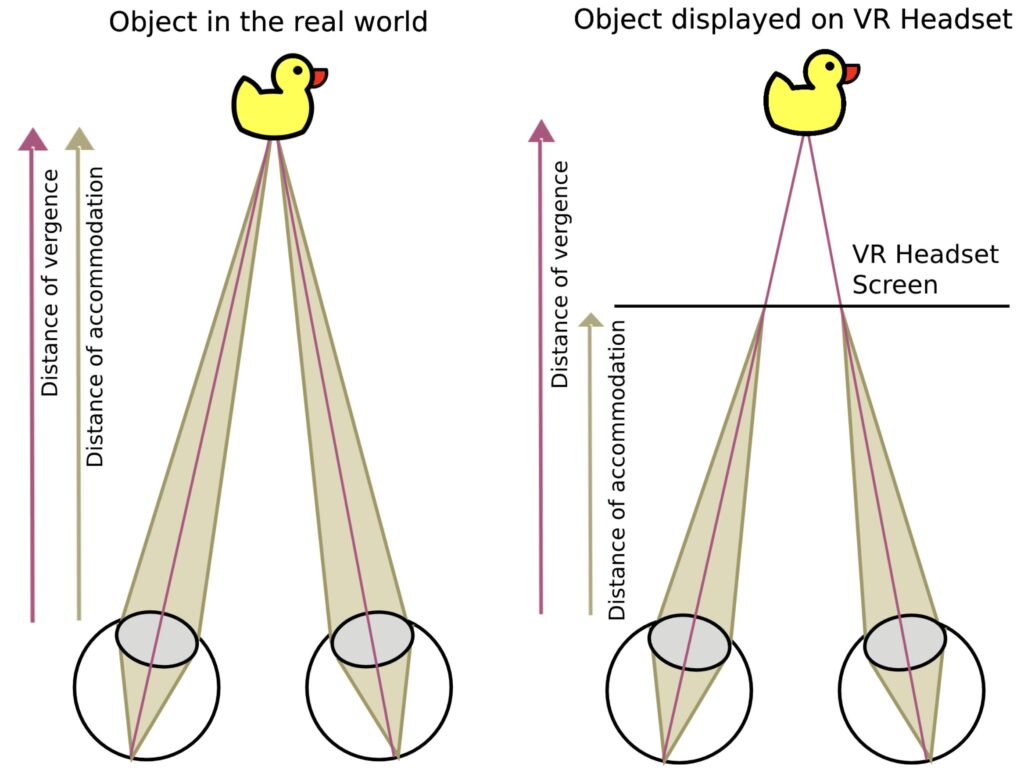

Concretely, the term vergence-accommodation refers to how the eyes work. To understand what goes wrong in VR, you first need to understand how human vision works:

- Accommodation: When we look at an object, our eye adjusts the shape of its lens to focus the image on the retina. This ability allows you to clearly see objects at different distances.

- Vergence: It is the coordinated movement of the eyes to direct the gaze towards the same point in space. It is crucial for depth perception and seeing clearly in the real world. In virtual reality, with two screens supposed to replicate 3D, this is obviously essential.

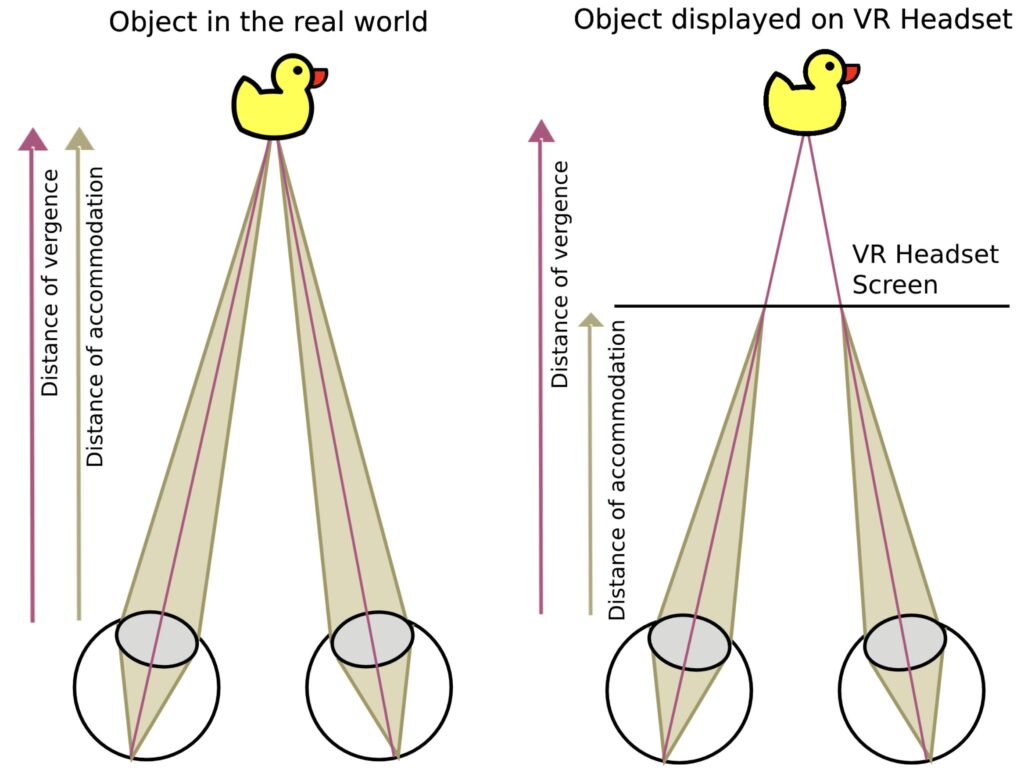

When you wear the Apple Vision Pro, there is no real distance. Far and near are simulated, since the two screens remain permanently 4 centimeters from the eyes. And the human brain is incapable of understanding what is happening to it.

In virtual reality, the eyes must accommodate to the fixed distance of the screens while adjusting their vergence for objects that appear to be at different distances. This disagreement between where the eyes should focus (accommodation) and where they should point (vergence) can lead to blurred perception, especially for “near” objects. Some people see clearly at every apparent distance; others cannot, because their eyes do not receive the cue they need to adjust their focus.
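To put rough numbers on this disagreement, here is a minimal Python sketch. It relies on two standard optics relations: the vergence angle for a point straight ahead is 2·atan((IPD/2)/distance), and the accommodation demand in diopters is 1/distance in meters. Everything else is an assumption made for illustration: the function names are hypothetical, the 63 mm interpupillary distance is a typical adult value, and the 1.3 m focal distance is a placeholder, not a figure published by Apple or measured for this article.

```python
import math

# Assumed values for illustration only (not Apple specifications).
ASSUMED_IPD_M = 0.063             # typical adult interpupillary distance
ASSUMED_FOCAL_DISTANCE_M = 1.3    # placeholder for the headset's fixed focus distance


def vergence_angle_deg(distance_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate
    a point straight ahead at the given distance."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def accommodation_demand_diopters(distance_m: float) -> float:
    """Focusing power the eye's lens must add to see clearly at this distance."""
    return 1.0 / distance_m


def vac_mismatch_diopters(virtual_distance_m: float,
                          focal_distance_m: float = ASSUMED_FOCAL_DISTANCE_M) -> float:
    """Gap between the focus that vergence suggests (virtual distance)
    and the focus the optics actually require (fixed focal distance)."""
    return abs(accommodation_demand_diopters(virtual_distance_m)
               - accommodation_demand_diopters(focal_distance_m))


if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0, 5.0):
        print(f"virtual object at {d:>4} m: "
              f"vergence {vergence_angle_deg(d):.2f} deg, "
              f"mismatch {vac_mismatch_diopters(d):.2f} D")
```

Under these assumptions, a window placed 50 centimeters away asks the eyes to converge as if focusing at 2 diopters while the optics demand a much lower value, and the gap shrinks as the virtual object moves away. That is consistent with the blur showing up on "near" elements rather than on the background.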

Do I have to wear glasses?

For the first time in my life, my vision is blurry. It is an extremely destabilizing experience, and it prompted me to ask myself many questions.

My first instinct was to buy corrective lenses for near vision from Apple and attach them to the headset. I tried three different strengths, with no improvement. This test allowed me to rule out undiagnosed hyperopia or presbyopia.

When I returned to Paris, I made an appointment with an ophthalmologist. First to interview him about the optical risks caused by VR headsets (this will be the subject of another article), then to diagnose my own eyesight.

In my case, the doctor explained that he had noticed an excess of vergence, which could be explained either by the beginnings of myopia, still imperceptible in the real world, or by a muscular problem. I was prescribed an orthoptic assessment (to improve the coordination of eye movements), as well as lenses with a slight correction, to rest my eyes and prevent them from over-accommodating. If the rehabilitation works, it's possible that my eyes will behave more normally in the Apple Vision Pro, which could “fix” my near vision. But would I need to buy Zeiss lenses for the headset?

In short, it's because I could lose my distance vision in the future that I can't see well up close in virtual reality today… I might as well tell you that optics is a difficult science to understand.

The Apple Vision Pro will never be a headset suitable for everyone

Will I be able to adjust my vision in the Vision Pro? Maybe, but maybe not. Orthoptics might be enough, glasses might be obligatory. In any case, do I want to wear corrective lenses in a VR headset when I usually see well? My geek soul might say yes, but how can I convince other users?

This vagueness taught me one thing: integrating as many innovative technologies as possible is not enough to solve all the problems of VR. Apple, like all the others, faces major optical challenges, and no single design will ever suit every user. There are several projects to address VAC (vergence-accommodation conflict), but none is mature enough to be adopted by Apple. I am thinking in particular of variable focal length lenses or screens equipped with a motor that moves them forward and backward, both of which aim to tell the brain when to adjust the shape of the eye's lens. Meta is working on prototypes with similar technology… but warns that it will take several years to get there.

Regardless, if you want to try or buy the Apple Vision Pro one day, be prepared for the possibility that you will not see everything clearly. It's frustrating when you know that others can see clearly, but there's nothing you can do about it. When tech meets optics, there are a few hiccups.