In this article from The Conversation, the author explains how artificial intelligence systems such as DALL-E and Midjourney create images. There is no magic involved, only algorithms and processes that destroy images and then reconstruct them.

From victories over the best human Go players to, more recently, weather forecasts of unprecedented precision, advances in AI keep coming and keep surprising. An even more disconcerting result is the generation of strikingly realistic images, which fuels some confusion between what is true and what is false. But how are these images generated automatically?


Image generation models are based on deep learning, that is, very large neural networks that can have several billion parameters. A neural network can be viewed as a function that maps input data to output predictions. This function is defined by a set of parameters (numerical values) that start out random and that the network adjusts during training.
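To make the idea of "a function defined by parameters" concrete, here is a minimal Python sketch of a single-layer network whose parameters start out random. The function names, the sizes (4 inputs, 3 labels) and the softmax output are assumptions chosen for readability; real image models stack many layers and have billions of parameters rather than fifteen.

```python
import math
import random

def init_params(n_inputs, n_outputs, rng):
    """Hypothetical one-layer network: one weight per (input, output) pair
    plus one bias per output, all drawn at random before any learning."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [rng.gauss(0.0, 0.1) for _ in range(n_outputs)]
    return weights, biases

def predict(params, x):
    """The network as a function: an input vector goes in, one probability per label comes out."""
    weights, biases = params
    scores = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    # Softmax turns the raw scores into probabilities that sum to 1.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def count_params(params):
    weights, biases = params
    return sum(len(row) for row in weights) + len(biases)

rng = random.Random(0)
params = init_params(n_inputs=4, n_outputs=3, rng=rng)  # e.g. labels: car, person, cat
probs = predict(params, [0.2, 0.5, 0.1, 0.9])
print(count_params(params))  # 15: 4 * 3 weights + 3 biases
print(probs)
```

Before training, these probabilities are essentially arbitrary; learning consists of adjusting the fifteen numbers until the outputs become useful.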

To give an order of magnitude, the Stable Diffusion model, which can generate realistic images, is made up of 8 billion parameters, and its training cost $600,000.

These parameters have to be learned. To explain how, we can focus on the simpler case of detecting objects in images: an image is given to the network as input, and the network must output the labels of the objects it may contain (car, person, cat, etc.).

Learning then consists of finding a combination of parameters that predicts the objects present in the images as accurately as possible. The quality of the result depends mainly on the amount of labeled data, the size of the model and the available computing power.
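What "finding a good combination of parameters" means can be sketched in the simplest possible setting: a two-label classifier (logistic regression) trained by gradient descent on a handful of hypothetical, hand-made feature vectors. Real detectors work on raw pixels with deep networks, and the data, names and settings here are assumptions; the loop is only the same in spirit: predict, measure the error, nudge every parameter to reduce it.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def score(params, x):
    w, b = params
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train(data, epochs=200, lr=0.5, seed=0):
    """Start from random parameters and repeatedly nudge them so that
    sigmoid(score) moves closer to each example's label (gradient descent on the log-loss)."""
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 0.1) for _ in range(len(data[0][0]))]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            err = sigmoid(score((w, b), x)) - y  # gradient of the log-loss w.r.t. the score
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def accuracy(params, data):
    hits = sum((sigmoid(score(params, x)) > 0.5) == (y == 1) for x, y in data)
    return hits / len(data)

# Hypothetical labeled data: two made-up features per image; label 1 = "cat", 0 = "car".
data = [([0.9, 0.2], 1), ([0.8, 0.1], 1), ([0.7, 0.3], 1),
        ([0.1, 0.9], 0), ([0.2, 0.8], 0), ([0.3, 0.7], 0)]
params = train(data)
print(accuracy(params, data))
```

The learning rate `lr` and the number of `epochs` are arbitrary choices here; with more data and far more parameters, the same kind of loop simply needs much more computation.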


Image generation is, in a way, the opposite process: given a text describing a scene, the model is expected to produce an image that matches this description, which is considerably more complex than predicting a label.

Destroy images to then create new ones

First, let's set the text aside and focus on the image alone. While generating an image is a complex task, even for a human being, destroying an image (the opposite problem) is trivial. Concretely, given an image made of pixels, randomly changing the color of some of its pixels is a simple way to alter it.

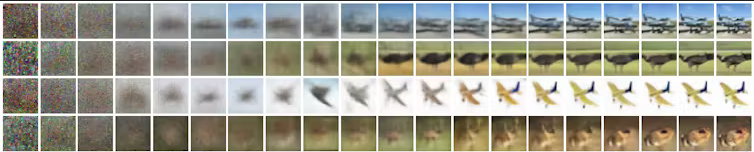

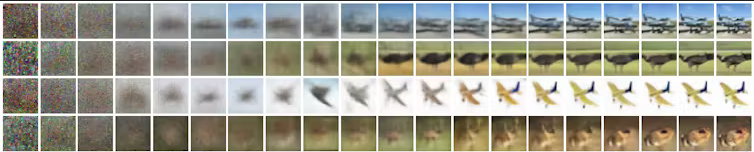
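This kind of alteration takes only a few lines of Python. The image representation (rows of RGB tuples), the function name `corrupt` and its `fraction` parameter are assumptions for illustration. Diffusion models typically add Gaussian noise rather than recoloring individual pixels, but the principle of an easy, controllable destruction is the same.

```python
import random

def corrupt(image, fraction, rng):
    """Return a copy of `image` (a list of rows of (R, G, B) tuples) in which each
    pixel has been replaced, with probability `fraction`, by a random color."""
    noisy = []
    for row in image:
        new_row = []
        for pixel in row:
            if rng.random() < fraction:
                pixel = (rng.randrange(256), rng.randrange(256), rng.randrange(256))
            new_row.append(pixel)
        noisy.append(new_row)
    return noisy

def count_changed(a, b):
    """Number of pixels that differ between two images of the same size."""
    return sum(p != q for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))

rng = random.Random(42)
image = [[(255, 0, 0)] * 8 for _ in range(8)]  # hypothetical 8x8 all-red image
slightly = corrupt(image, 0.1, rng)
heavily = corrupt(image, 0.9, rng)
print(count_changed(image, slightly), count_changed(image, heavily))
```

Raising `fraction` gives a family of increasingly destroyed versions of the same image, which is exactly the raw material a denoiser is trained on.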

We can give a neural network a slightly altered image as input and ask it to predict the original image as output. Training the model this way teaches it to denoise images, which is a first step towards image generation. If we then start from a heavily noisy image and call the model repeatedly, each call produces a slightly less noisy image, until we obtain a completely denoised one.
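Training a real denoising network is far beyond a short example, but the training recipe can be sketched under strong simplifying assumptions: each "image" is a single row of 32 pixel values forming a smooth ramp, the noise is Gaussian, and the "network" is a hypothetical three-weight filter trained by gradient descent. Every name and setting here is illustrative; what carries over to real models is the recipe of pairing a noisy input with its clean original and adjusting the parameters to shrink the difference.

```python
import random

def make_clean(rng, length=32):
    """Hypothetical 'clean image': one row of pixel intensities forming a smooth ramp."""
    start, step = rng.uniform(0.0, 1.0), rng.uniform(-0.02, 0.02)
    return [start + step * i for i in range(length)]

def add_noise(row, sigma, rng):
    return [v + rng.gauss(0.0, sigma) for v in row]

def neighbours(row, i):
    """The pixel and its left/right neighbours (border pixels are repeated)."""
    return row[max(i - 1, 0)], row[i], row[min(i + 1, len(row) - 1)]

def denoise(weights, row):
    """Toy denoiser: each output pixel is a weighted sum of three input pixels."""
    return [sum(w * v for w, v in zip(weights, neighbours(row, i))) for i in range(len(row))]

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def train_denoiser(steps=3000, lr=0.05, sigma=0.1, seed=0):
    rng = random.Random(seed)
    weights = [0.0, 1.0, 0.0]  # start as the identity: output = noisy input
    for _ in range(steps):
        clean = make_clean(rng)
        noisy = add_noise(clean, sigma, rng)  # training pair: (noisy input, clean target)
        pred = denoise(weights, noisy)
        grads = [0.0, 0.0, 0.0]
        for i in range(len(noisy)):
            err = 2 * (pred[i] - clean[i]) / len(noisy)  # derivative of the MSE
            for k, v in enumerate(neighbours(noisy, i)):
                grads[k] += err * v
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights

weights = train_denoiser()
rng = random.Random(1)
cleans = [make_clean(rng) for _ in range(200)]
noisies = [add_noise(c, 0.1, rng) for c in cleans]
before = sum(mse(n, c) for n, c in zip(noisies, cleans)) / len(cleans)
after = sum(mse(denoise(weights, n), c) for n, c in zip(noisies, cleans)) / len(cleans)
print(weights, before, after)
```

On fresh test rows, the learned filter should leave a clearly smaller error than the noisy input itself, because training pushes it to average each pixel with its neighbours.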

Taking the process to its extreme, we could start from an image made only of noise (a snow of random pixels), in other words an image of nothing, and call our “denoiser” model repeatedly until we arrive at an image like the one shown below:

We then have a process capable of generating images, but it is of limited interest: depending on the initial random noise, after several iterations it can end up producing any image at all. We therefore need a way to guide the denoising process, and text is what is used for this task.
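The "denoise again and again, starting from nothing" loop can be sketched without training anything by replacing the network with an idealised denoiser. For a tiny hypothetical dataset of three 2x2 greyscale images, the best possible guess of the clean image behind a noisy one is a weighted average of the training images, and a network trained to minimise squared error approximates this guess. The noise schedule (`sigma_max`, `sigma_min`, `steps`) and the update rule, in the spirit of deterministic diffusion samplers, are assumptions rather than the settings of any real model.

```python
import math
import random

# Hypothetical training set: three tiny 2x2 greyscale "images", flattened to 4 values.
DATASET = [
    [1.0, 1.0, -1.0, -1.0],   # bright top, dark bottom
    [-1.0, 1.0, -1.0, 1.0],   # dark left, bright right
    [1.0, -1.0, -1.0, 1.0],   # bright diagonal
]

def ideal_denoiser(x, sigma):
    """Best guess of the clean image behind `x` at noise level `sigma`: the training
    images averaged with weights reflecting how likely each one is to have produced `x`."""
    logits = [-sum((a - b) ** 2 for a, b in zip(x, img)) / (2 * sigma ** 2) for img in DATASET]
    top = max(logits)
    weights = [math.exp(l - top) for l in logits]  # subtracting the max avoids overflow
    total = sum(weights)
    return [sum(w * img[k] for w, img in zip(weights, DATASET)) / total for k in range(len(x))]

def generate(seed, steps=50, sigma_max=10.0, sigma_min=0.01):
    rng = random.Random(seed)
    # Geometric schedule of noise levels, from very noisy to almost clean.
    sigmas = [sigma_max * (sigma_min / sigma_max) ** (i / (steps - 1)) for i in range(steps)]
    x = [rng.gauss(0.0, sigma_max) for _ in range(len(DATASET[0]))]  # pure noise
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        denoised = ideal_denoiser(x, sigma)
        # Move towards the denoised guess, keeping leftover noise in proportion
        # to the next (smaller) noise level.
        x = [d + (sigma_next / sigma) * (xi - d) for xi, d in zip(x, denoised)]
    return ideal_denoiser(x, sigmas[-1])

for seed in range(5):
    print(seed, generate(seed))
```

Different seeds land on different training images: nothing in the process says which image we want, which is precisely the limitation that text guidance addresses.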

From noise to the final image

The denoising process needs images; these come from the Internet and make up the training dataset. The text needed to guide the denoising is simply the captions of those images found on the Internet. Alongside the network that learns to denoise images, a network that represents the text is used. So when the model learns to denoise an image, it also learns which words that denoising is associated with. Once training is complete, we obtain a model that, given a descriptive text and pure noise, removes the noise over successive iterations and converges towards an image matching the textual description.

This process removes the need for dedicated manual labeling: it feeds on millions of images paired with the captions already present on the web. Finally, since a picture is worth a thousand words, here is an example: the image above was generated by the Stable Diffusion model from the text “fried egg flowers in the bacon garden”.
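The text-guided denoising described above can be illustrated with a toy, self-contained sketch: a tiny hypothetical dataset of three captioned 2x2 images, an idealised denoiser that only averages the training images compatible with the prompt, and repeated denoising starting from pure noise. The keyword match in `matching_images` is a crude stand-in: real systems such as Stable Diffusion pass the text through a learned text encoder whose output is fed into the denoising network. All names, the noise schedule and the update rule are assumptions.

```python
import math
import random

# Hypothetical captioned training set: (caption, flattened 2x2 greyscale image).
DATASET = [
    ("bright top dark bottom", [1.0, 1.0, -1.0, -1.0]),
    ("dark left bright right", [-1.0, 1.0, -1.0, 1.0]),
    ("bright diagonal", [1.0, -1.0, -1.0, 1.0]),
]

def matching_images(prompt):
    """Toy stand-in for text understanding: keep the images whose caption
    contains every word of the prompt (all images if none match)."""
    words = prompt.lower().split()
    matches = [img for caption, img in DATASET if all(w in caption.split() for w in words)]
    return matches or [img for _, img in DATASET]

def guided_denoiser(x, sigma, candidates):
    """Likelihood-weighted average of the candidate images at noise level `sigma`."""
    logits = [-sum((a - b) ** 2 for a, b in zip(x, img)) / (2 * sigma ** 2) for img in candidates]
    top = max(logits)
    weights = [math.exp(l - top) for l in logits]
    total = sum(weights)
    return [sum(w * img[k] for w, img in zip(weights, candidates)) / total for k in range(len(x))]

def generate(prompt, seed, steps=50, sigma_max=10.0, sigma_min=0.01):
    rng = random.Random(seed)
    candidates = matching_images(prompt)
    sigmas = [sigma_max * (sigma_min / sigma_max) ** (i / (steps - 1)) for i in range(steps)]
    x = [rng.gauss(0.0, sigma_max) for _ in range(len(DATASET[0][1]))]  # pure noise
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        d = guided_denoiser(x, sigma, candidates)
        x = [di + (sigma_next / sigma) * (xi - di) for xi, di in zip(x, d)]
    return guided_denoiser(x, sigmas[-1], candidates)

# Whatever the starting noise, the prompt steers the result to the matching image.
for seed in range(3):
    print(generate("top", seed))
```

Whatever the seed, the prompt "top" always leads to the same image, whereas a vague prompt such as "bright", which matches every caption, leaves the outcome to the random noise.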

Christophe Rodrigues, Teacher-researcher in computer science, Leonardo da Vinci Center

This article is republished from The Conversation under a Creative Commons license. Read the original article.